Deploying Multimodal AI into Clinical Care

Analyzing Hidden Patterns To Transform Cancer Care

Ever wonder why two seemingly identical cancer patients respond completely differently to the same treatment?

An interesting new study in Nature Cancer shows how AI can decode these hidden patterns, and it could change how treatment decisions are made.

Not Just Better, But Significantly Better

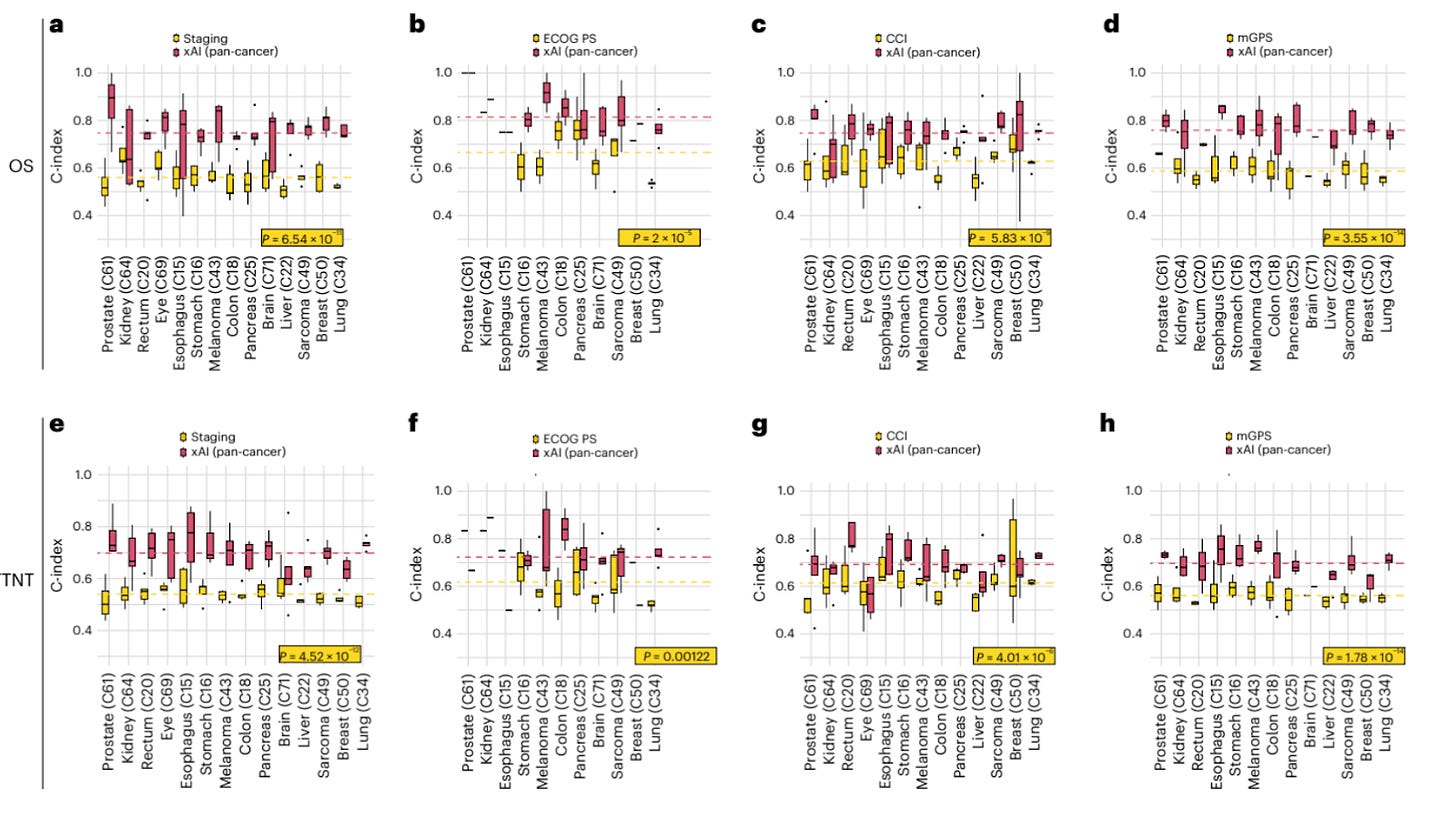

Look at Figure 3 from the study (p.5).

Their AI model outperformed standard clinical tools by margins that could redefine cancer care (the percentages below are relative improvements in the reported scores):

34% better than UICC staging (0.75 vs. 0.56, P < 0.001)

21% better than ECOG Performance Status (0.81 vs. 0.67, P < 0.001)

19% better than Charlson Comorbidity Index (0.75 vs. 0.63, P < 0.001)
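The headline percentages match relative improvements, (model − baseline) / baseline, rather than absolute differences in score. This short sketch recomputes both from the three score pairs quoted above. The function name is illustrative, and the numbers are exactly those listed in the post.

```python
def relative_improvement(new: float, baseline: float) -> float:
    """Relative gain of `new` over `baseline`, e.g. 0.34 means 34% higher."""
    return (new - baseline) / baseline

# (AI model score, comparison tool score) as quoted from Figure 3
comparisons = {
    "UICC staging": (0.75, 0.56),
    "ECOG Performance Status": (0.81, 0.67),
    "Charlson Comorbidity Index": (0.75, 0.63),
}

for name, (model, tool) in comparisons.items():
    print(f"{name}: +{model - tool:.2f} absolute, "
          f"{relative_improvement(model, tool):.0%} relative")

# Expected output:
# UICC staging: +0.19 absolute, 34% relative
# ECOG Performance Status: +0.14 absolute, 21% relative
# Charlson Comorbidity Index: +0.12 absolute, 19% relative
```

Seeing both numbers side by side helps: "34% better" corresponds to a 0.19-point gain on the reported scale.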

This isn't just statistical significance. These are potential second chances for actual patients waiting on treatment decisions today.

Multimodal Context Matters

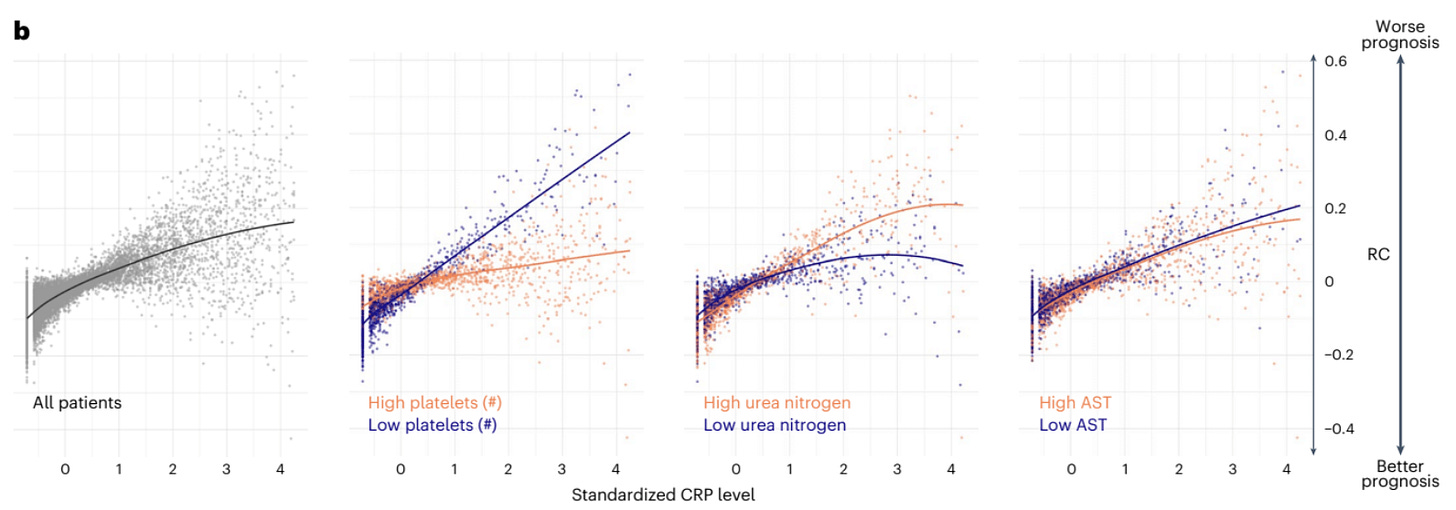

The most compelling insight from this research appears in Figure 4B, which reveals how the prognostic significance of C-reactive protein (CRP), a common inflammatory marker routinely used to assess prognosis, varies dramatically depending on other factors.

Elevated CRP levels are generally linked to an increased risk of death and shorter survival. But when CRP is analyzed in context with platelet counts, a patient's prognosis can look very different:

High CRP + low platelets = significantly elevated risk

High CRP + high platelets = substantially lower risk
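Why can the same CRP value mean opposite things? One simple way to picture it is an interaction term. The study's model is far more complex than this. The sketch below is only a toy log-linear hazard with an explicit CRP × platelets term, and every coefficient in it is made up for illustration (not taken from the paper). It shows how a high CRP can push estimated risk up or down depending on the platelet count.

```python
import math

# HYPOTHETICAL coefficients on standardized (z-scored) lab values.
# Chosen only to illustrate an interaction; NOT estimates from the study.
BETA_CRP = 0.5           # main effect: higher CRP raises risk on its own
BETA_PLATELETS = -0.1    # small main effect of platelets
BETA_INTERACTION = -0.6  # CRP's effect shrinks or flips as platelets rise

def relative_hazard(crp_z: float, platelets_z: float) -> float:
    """Hazard relative to an average patient (crp_z = platelets_z = 0)."""
    log_hr = (BETA_CRP * crp_z
              + BETA_PLATELETS * platelets_z
              + BETA_INTERACTION * crp_z * platelets_z)
    return math.exp(log_hr)

# High CRP (+1 SD) combined with low vs. high platelets (-1 / +1 SD)
print(f"High CRP + low platelets:  {relative_hazard(1.0, -1.0):.2f}")  # → 3.32
print(f"High CRP + high platelets: {relative_hazard(1.0, 1.0):.2f}")   # → 0.82
```

Without the interaction term, the model would assign the same CRP effect to every patient regardless of platelet count. The interaction is what lets context change the answer.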

These relationships exist whether we see them or not. AI simply makes them visible and can put them in the palm of every physician's hand.

"But We Need More Evidence" They Say

I can hear it already: "We need to see more evidence." Or: "Healthcare isn't Google Maps. We need to see clinical trial results before we implement this AI."

This skepticism misses something fundamental.

Traditional clinical trials test whether an intervention works better than standard care. They're designed to evaluate drugs, devices, and procedures.

AI tools are different. They're not interventions themselves—they're intelligence amplifiers that help clinicians make better decisions with existing data.

Instead of asking, "Should AI replace clinical judgment?", we should ask ourselves: "Are we comfortable continuing to make decisions without seeing all the patterns in our data for each specific patient case?"

What would you want for your family?

Would you feel comfortable knowing a treatment decision was based on evidence that worked for a general population but might not apply to your specific situation?

Personally, I always wonder: "Am I similar to the population studied in that clinical trial? Are those results relevant to me?"

The uncomfortable truth? Most patients exist in this clinical gray zone: similar enough to studied populations to apply the findings, yet different enough to call those outcomes into question.

What we need isn't just more data; we need smarter systems that account for these similarities and differences and empower doctors to make truly personalized decisions.

Our health deserves more than statistical approximation.

The Google Maps Analogy: More Apt Than You Think

Google Maps doesn't drive your car. It processes real-time inputs like weather and current traffic, and it recalibrates as new information arrives. Having this tool at your fingertips lets you make the best driving decisions to reach your destination.

Similarly, clinical AI doesn't treat patients. It provides deeper insights so clinicians can make more informed choices based on numerous multimodal data elements.

Would you demand a randomized controlled trial before using Google Maps? Would you want it to be static, or to update as new data emerges?

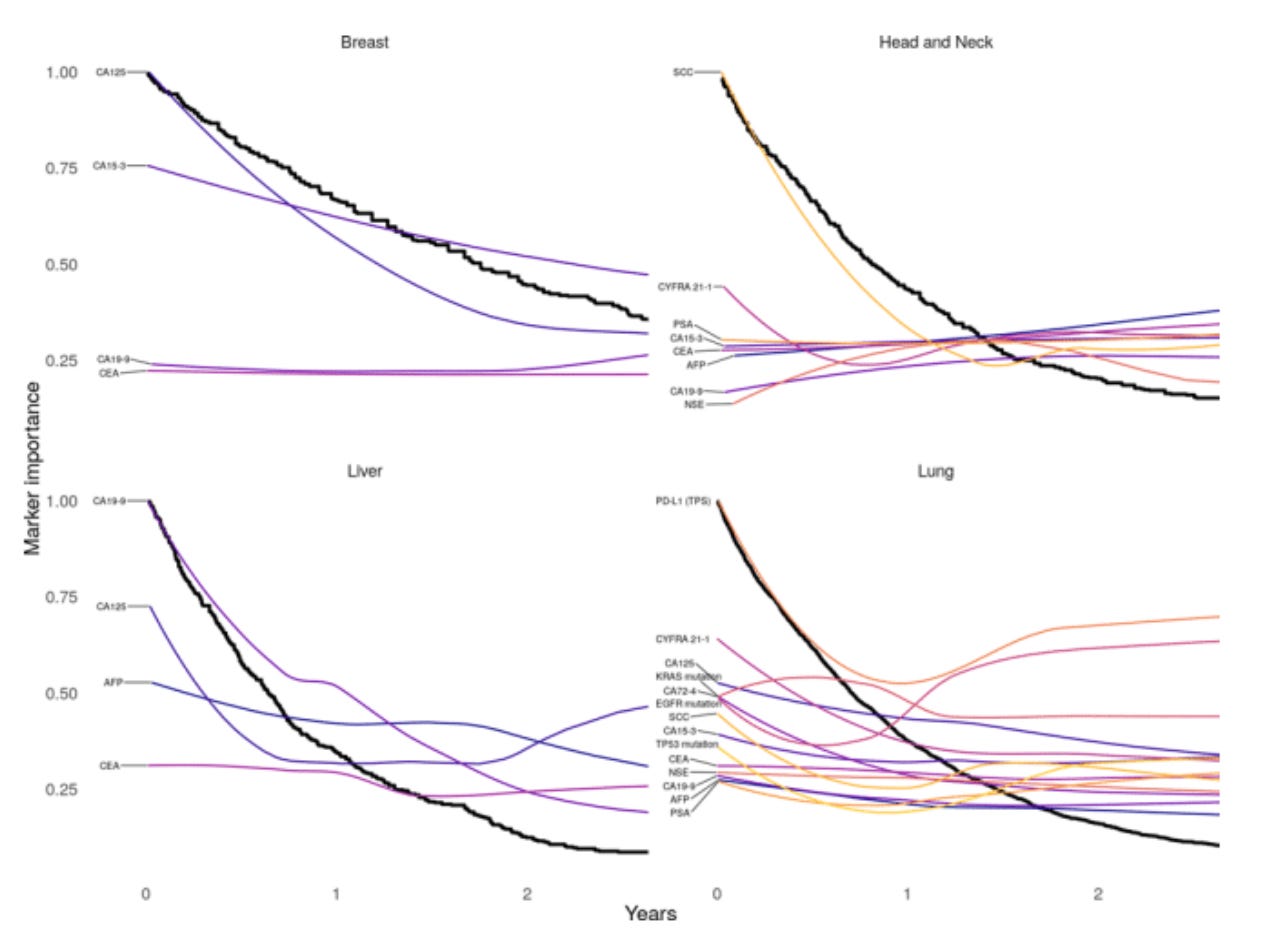

The paper highlights how the relative importance of markers changes over a patient's cancer journey:

In lung cancers, tumor-specific markers like EGFR mutations and PD-L1 expression shift in importance as the disease evolves. Sometimes what matters most at diagnosis becomes secondary later in treatment.

No static model could capture this dynamic reality, so we need systems that adapt to new information as it is generated.

Moving Forward Responsibly

The path forward isn't blind implementation; it requires thoughtful integration.

While the paper highlights tremendous opportunities, the form factor needs some refinement. The clinician guide in Figure 5 is overwhelming, even for someone like me who analyzes multimodal data for a living.

Before bedside implementation, we need to:

Validate predictions against real-world outcomes

Ensure explainability so clinicians understand the "why" behind recommendations

Design user-friendly interfaces to turn information into actionable insight

Monitor for bias and continuously improve

Measure impacts on actual patient outcomes
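What does "validate predictions against real-world outcomes" look like in practice? One common check for survival-risk models is Harrell's concordance index (C-index): among comparable patient pairs, the fraction in which the patient predicted to be at higher risk actually had the event sooner. The sketch below is simplified and written for illustration only. It skips pairs with tied follow-up times, and the function name and toy cohort are hypothetical. Real validation work would typically rely on an established survival-analysis library (for example, lifelines provides `concordance_index` in `lifelines.utils`; check its sign convention for risk scores).

```python
from itertools import combinations

def harrell_c_index(times, events, risks):
    """Simplified Harrell's C-index.

    times:  observed follow-up time per patient
    events: 1 if the event (e.g. death) was observed, 0 if censored
    risks:  model risk score per patient (higher = predicted worse outcome)
    Pairs with identical times are skipped in this simplified version.
    """
    concordant = 0.0
    comparable = 0
    for i, j in combinations(range(len(times)), 2):
        if times[i] == times[j]:
            continue  # simplification: ignore tied follow-up times
        # `a` is the patient with the shorter follow-up time
        a, b = (i, j) if times[i] < times[j] else (j, i)
        if not events[a]:
            continue  # censored before the other: ordering unknown
        comparable += 1
        if risks[a] > risks[b]:
            concordant += 1.0  # higher predicted risk, earlier event
        elif risks[a] == risks[b]:
            concordant += 0.5  # tied predictions get half credit
    return concordant / comparable if comparable else float("nan")

# Hypothetical toy cohort, for illustration only
times = [5, 10, 15, 20]
events = [1, 1, 0, 1]
risks = [0.9, 0.4, 0.6, 0.1]
print(harrell_c_index(times, events, risks))  # → 0.8
```

A value of 0.5 means the model ranks patients no better than chance, while 1.0 means every comparable pair is ordered correctly. Rerunning a check like this on new real-world cohorts is one way to keep monitoring performance after deployment.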

The Bottom Line

After years at the intersection of healthcare and technology, I'm convinced: multimodal AI in healthcare isn't a question of if, but when.

The choice isn't between perfect certainty and reckless implementation. It's between leveraging all available data patterns and deliberately ignoring them.

Are we ready to see the full picture? Our patients deserve nothing less.

What's your experience with multimodal data integration in healthcare? How is your organization balancing innovation and caution?

#PrecisionMedicine #HealthcareAI #FutureOfMedicine

If you found this post helpful, please share or subscribe to read more about the intersection of AI and precision medicine.