Ever sat in a room where pathology, genomics, radiology and clinical experts talk past each other instead of finding shared insights?

This disconnect isn't just frustrating. I've seen it firsthand in clinical, research and drug development settings. The worst part? It's costing us treatment opportunities and impacting patient outcomes.

I’ve been building AI solutions in precision medicine for a decade and I constantly feel the need to stress this point: we need to stop chasing dreams thinking one modality will rule them all.

Instead, we need to solve the data problem by bridging real-world multimodal data at scale. Sounds easy, right?

Well, a recent paper from Mass General Brigham and Harvard covers this topic quite well: "Modeling Dense Multimodal Interactions Between Biological Pathways and Histology for Survival Prediction".

The Digital Pathology Reality Check

After implementing numerous AI solutions in healthcare settings, I've developed healthy skepticism toward any approach claiming comprehensive insights from a single data modality.

Take digital pathology, for instance. It has transformative potential, but it's just one piece of an intricate puzzle.

The hard truth: Expecting AI to predict complex treatment responses or long-term outcomes from a single histology slide is asking a lot, regardless of how sophisticated our algorithms become.

Let's be pragmatic. Whole slide images (WSIs) provide extraordinary spatial resolution of tumor morphology, but they can't directly reveal:

Underlying driver mutations

Pathway activation status

Immune microenvironment dynamics

Treatment history effects

As the authors themselves note on page 1: "WSIs represent a very high-dimensional spatial description of a tumor, while bulk transcriptomics represent a global description of gene expression levels within that tumor."

This isn't a limitation—it's reality. And acknowledging this reality opens the door to what actually works.

The Multimodal Promise (That's Actually Delivering)

This is where the paper represents something genuinely different from typical AI hype.

What makes this research exciting isn't just technology—it's philosophy.

SURVPATH tackles a fundamental integration challenge most AI systems conveniently sidestep, connecting visual patterns from histology with molecular pathways driving disease progression.

Figure 2 (p.3): SURVPATH's ingenious three-step approach bridges molecular and morphological worlds by converting complex transcriptomics into interpretable "pathway tokens" that can "converse" with histology features.

The researchers created "pathway tokens" that encode specific biological functions—transforming abstract molecular data into interpretable units that can "talk to" histology features in a shared computational framework.
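To make the idea of a pathway token concrete, here is a minimal sketch, not the paper's implementation. The gene names, pathway memberships, expression values, and the helper names `make_encoder` and `tokenize` are all made up for illustration, and random weights stand in for the encoders a real model would learn. The point is only the shape of the transformation: genes are grouped by pathway, and each group is mapped to one fixed-length vector.

```python
import random

# Hypothetical toy inputs: genes, values, and pathway membership are illustrative only.
expression = {"TP53": 2.1, "CDH1": -0.4, "VIM": 1.7, "SNAI1": 0.9, "MKI67": 1.2}
pathways = {
    "EMT": ["CDH1", "VIM", "SNAI1"],
    "CELL_CYCLE": ["TP53", "MKI67"],
}
TOKEN_DIM = 4  # assumed token size; a real model would use a much larger one


def make_encoder(n_genes, dim, seed):
    """Build one small linear map per pathway.

    Random weights are a stand-in for parameters that would be learned.
    """
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(n_genes)] for _ in range(dim)]


def tokenize(expression, pathways, dim=TOKEN_DIM):
    """Turn bulk expression values into one fixed-length token per pathway."""
    tokens = {}
    for i, (name, genes) in enumerate(sorted(pathways.items())):
        values = [expression[g] for g in genes]
        weights = make_encoder(len(genes), dim, seed=i)
        # Each output dimension is a weighted sum of the pathway's gene values.
        tokens[name] = [sum(w * v for w, v in zip(row, values)) for row in weights]
    return tokens


pathway_tokens = tokenize(expression, pathways)
for name, token in pathway_tokens.items():
    print(name, [round(x, 3) for x in token])
```

Because every pathway ends up as a vector of the same length, regardless of how many genes it contains, the tokens can sit in the same sequence as histology patch features.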

This isn't incremental improvement. It's rethinking how we represent biological knowledge within AI systems.

The Early Fusion Difference

Here's where it gets interesting for anyone serious about precision medicine applications.

What particularly excites me about this work is the commitment to early fusion methods, a significant departure from conventional approaches that delivers measurable performance gains. If you're wondering what early fusion methods are and need a crash course, one is linked here.

As the researchers discovered (page 6):

"Early fusion methods (MCAT, MOTCat and SURVPATH) outperform all late fusion methods. We attribute this observation to the creation of a joint feature space that can model fine-grained interactions between transcriptomics and histology tokens."

Most existing models use late fusion (analyzing modalities separately then combining conclusions)—essentially running parallel analyses that only meet at the finish line.

SURVPATH implements early fusion that explicitly models cross-modal relationships from the beginning, yielding a remarkable 7.3% performance improvement over leading models.

Think about what this means practically: we're moving from "here's what the image shows + here's what the molecular data shows" to "here's how molecular pathways and visual patterns directly interact."
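The contrast can be sketched in a few lines of Python. This is a deliberately simplified illustration under my own assumptions, not SURVPATH's architecture: the token values are invented, the attention has no learned weights, and the function names are hypothetical. It only shows where the two modalities meet in each design.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def mean_pool(tokens):
    """Average a list of equal-length vectors into one vector."""
    n = len(tokens)
    return [sum(t[d] for t in tokens) / n for d in range(len(tokens[0]))]


def self_attention(tokens):
    """Unparameterized dot-product self-attention: each token attends to all tokens."""
    dim = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[d] for w, v in zip(weights, tokens)) for d in range(dim)])
    return out


def late_fusion(pathway_tokens, patch_tokens):
    # Each modality is summarized separately; the summaries are only concatenated at the end.
    return mean_pool(self_attention(pathway_tokens)) + mean_pool(self_attention(patch_tokens))


def early_fusion(pathway_tokens, patch_tokens):
    # Both modalities share one sequence, so attention mixes pathway and patch tokens.
    return mean_pool(self_attention(pathway_tokens + patch_tokens))


# Hypothetical 2-dimensional tokens, values chosen arbitrarily.
pathway_toks = [[1.0, 0.0], [0.5, 0.5]]
patches_a = [[0.0, 1.0], [0.2, 0.8], [0.9, 0.1]]
patches_b = [[1.0, 1.0], [0.0, 0.0], [0.3, 0.7]]

print(late_fusion(pathway_toks, patches_a))
print(early_fusion(pathway_toks, patches_a))
```

In the late fusion version, the pathway half of the output is identical no matter which slide it is paired with, because the two modalities never interact before the final concatenation. In the early fusion version, every token's representation depends on tokens from the other modality.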

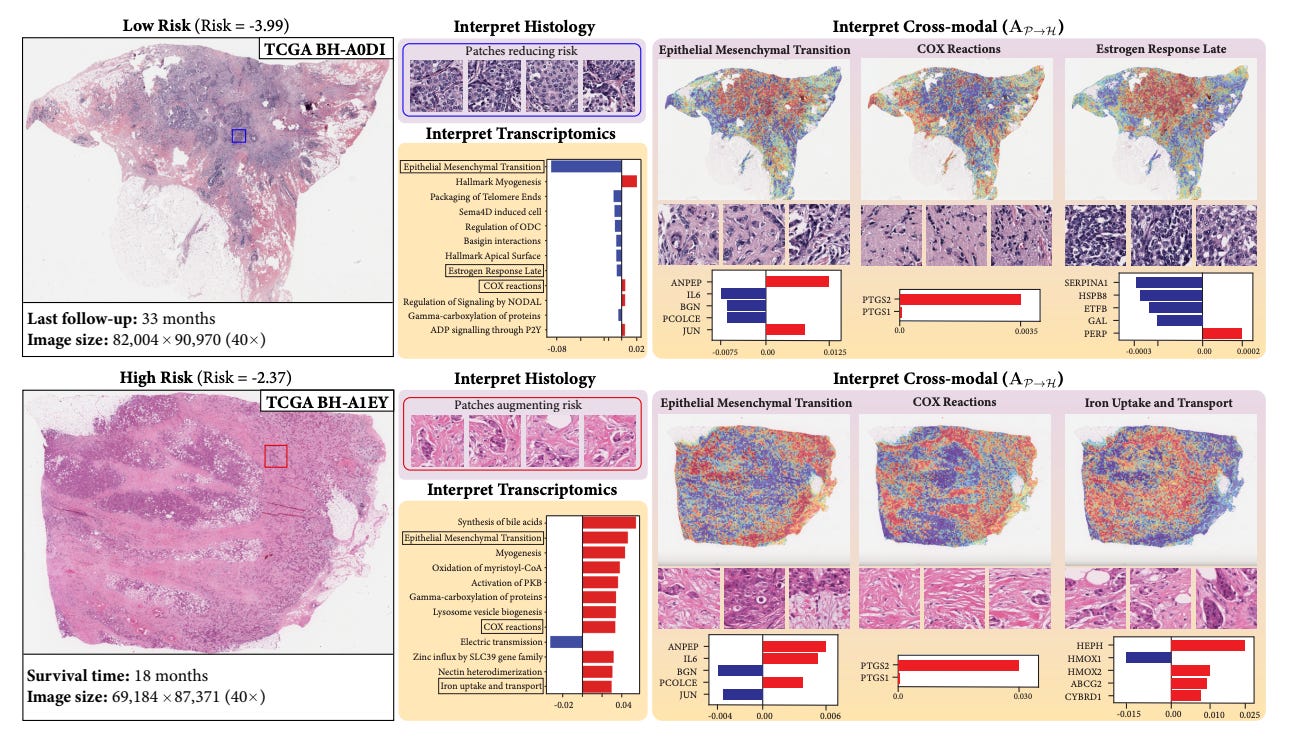

Figure 3 (p.8): SURVPATH's multi-level interpretability reveals how specific biological pathways like Epithelial-Mesenchymal Transition correlate with distinct morphological features in breast cancer, with red indicating risk-increasing features and blue showing protective elements.

Where Smart Implementation Creates Real Value

Companies like Tempus have expansive multimodal datasets (I'm biased, of course) that help translate this type of research into clinical impact, but there are other useful datasets as well.

Just make sure you are using source data as inputs. Datasets are like food: organic, straight-from-the-source is better than highly processed stuff. Without source files, your AI model might be doomed from the get-go.

Regardless of data sources, implementation requires thoughtful integration:

Start with targeted applications where benefits clearly outweigh integration costs

Design systems from day one around the richest multimodal data that is routinely available

Prioritize interpretability that enhances rather than mystifies clinical decision-making

I've seen too many promising approaches fail at implementation. The difference between academic promise and clinical reality often comes down to practical integration constraints.

What This Means for Drug Development Teams Right Now

For those in pharmaceutical development, early fusion approaches unlock capabilities that were previously theoretical:

Mechanism visualization: Directly see how tissue morphology correlates with pathway activation

Biomarker prediction: Identify integrated visual-molecular signatures and predict features with multimodal datasets at scale

Stratification improvement: Find responder subgroups invisible to single-modality approaches

The approach demonstrated in Figure 1 on page 1 shows how SURVPATH visualizes multimodal interactions between biological pathways and morphological patterns—exactly the kind of interpretable insight needed to accelerate translational research.

The Path Forward

After years in this field, I've learned that breakthrough technology only matters when it solves real clinical and research problems.

Digital pathology isn't a universal solution, but early fusion approaches like SURVPATH represent genuine steps forward in how we integrate complementary data streams while maintaining clinical interpretability.

The future belongs to teams who understand the unique value contribution of each modality and design integration points that enhance rather than obscure that value.

What multimodal approaches are you implementing or evaluating? Which integration methods have you found most effective in bridging clinical and molecular insights?

#PrecisionMedicine #AI #MultimodalData #DrugDiscovery #ComputationalPathology

If you found this post helpful, please share or subscribe to read more about the intersection of AI x Precision Medicine.
